Human in the Loop for Zapier Automations

Add human review and approval steps to your AI-powered Zapier workflows

EnforcedFlow’s Human in the Loop is designed for generative AI workflows. This new action lets a human review automatically generated output before the process continues, helping to ensure accuracy, compliance, and accountability in automated workflows.

For example, take a Zapier automation that generates a personalized email using OpenAI. With Human in the Loop, a human can review the draft to make sure it is correct, or fine-tune it, before it is sent to the customer.

How it works

To set up a workflow like the one above with the Human in the Loop extension, you need two automations: one that generates the email content and sends it for approval, and another that sends the email to the customer once it has been approved. Both are straightforward to set up.

Getting started

Step 1: Enable the EnforcedFlow extension for Zapier

When setting up your Zap, search for EnforcedFlow in the Zapier app directory to get started.

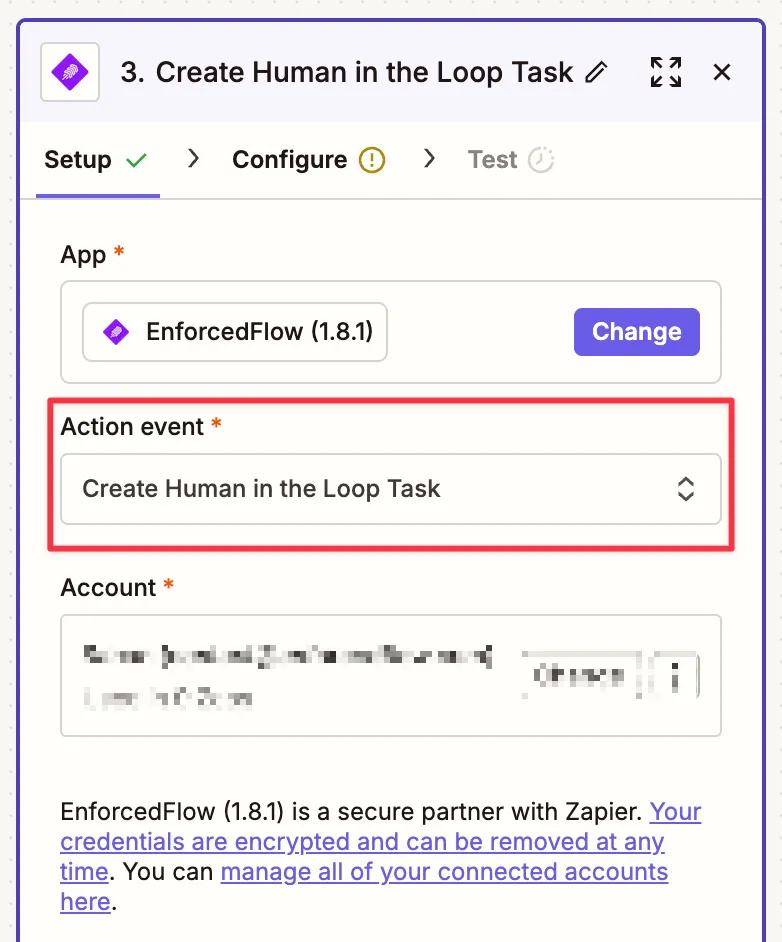

Select New Human in the Loop Task as the action event.

Step 2: Set up the action to generate content and send it to a human

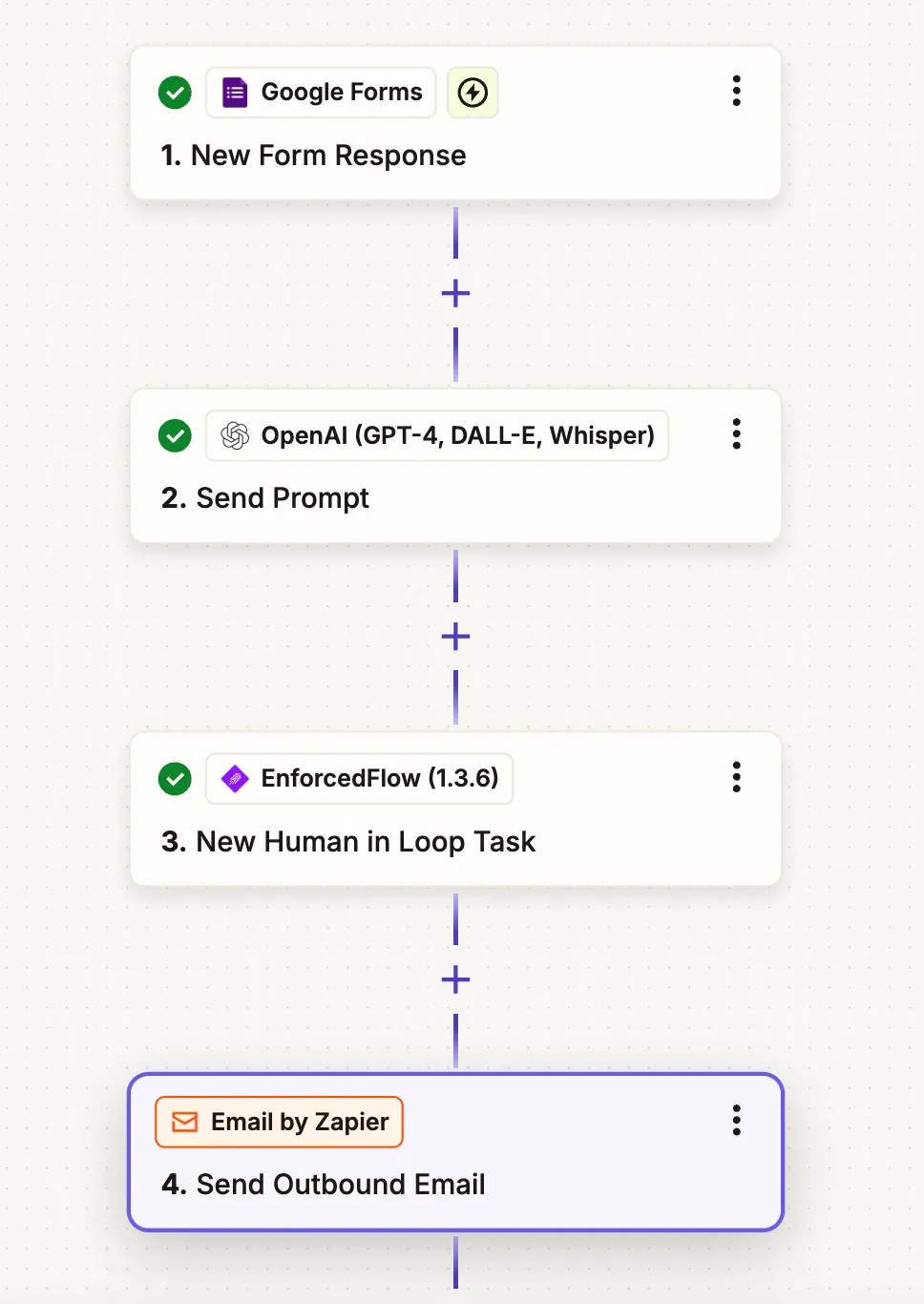

The first part of the setup looks like this:

- The trigger listens for new Google Forms submissions.

- Information from the form is then sent to OpenAI to draft an email response.

- A new task is created for a human to review. This action returns a unique link to share with the reviewer.

- The link is emailed to the reviewer.

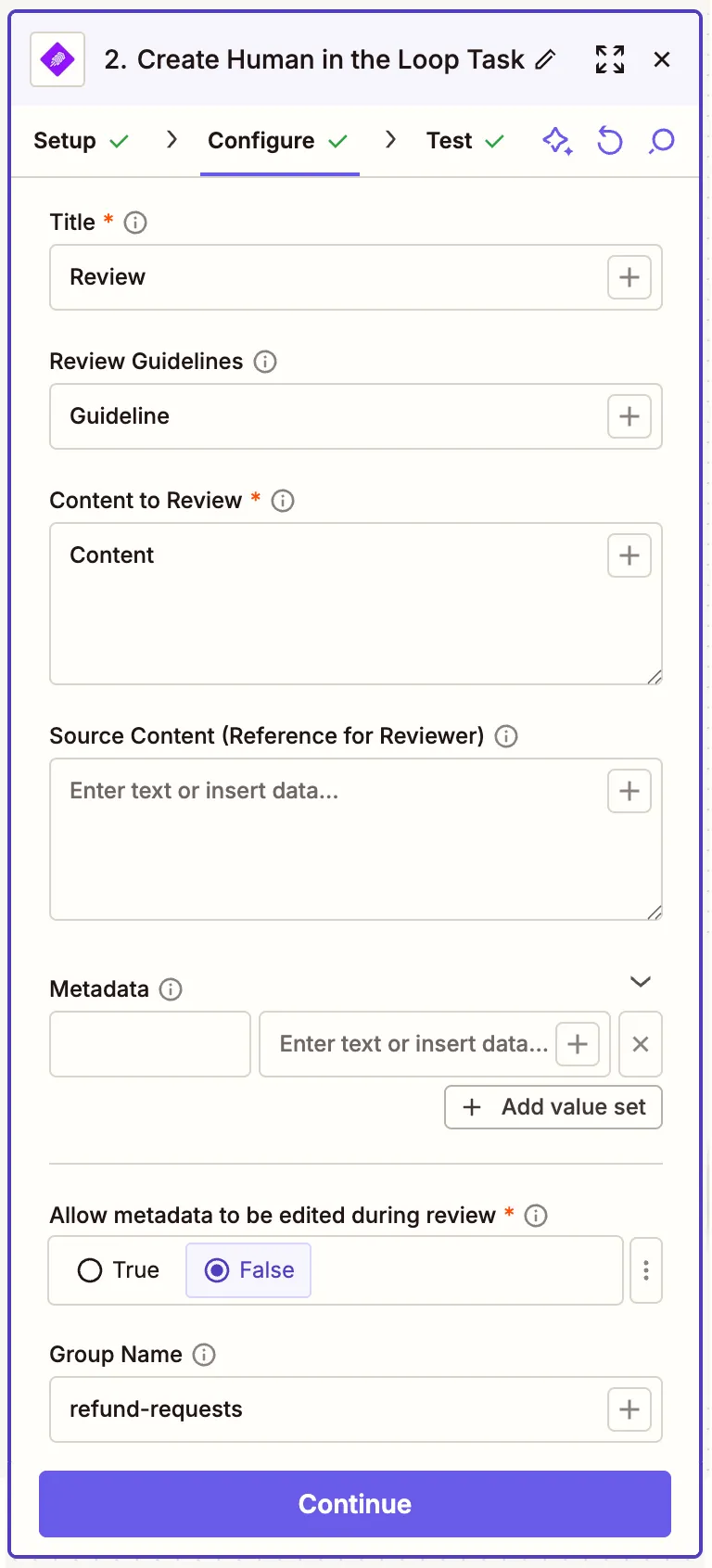

The New Human in the Loop Task action takes the following configuration options:

- Title (Required): The title that will be displayed at the top of the review page. This helps reviewers understand what they’re reviewing at a glance.

- Review Guidelines (Optional): General instructions or guidelines for the reviewer to follow. For example, you might specify “Match our brand tone and voice”, “Ensure all facts are accurate”, or “Keep the response under 500 words”. These guidelines appear prominently at the top of every review to guide the reviewer’s work.

- Content to Review (Required): The actual content that needs to be reviewed and potentially edited. This is the main text that the reviewer will work with during the review process.

- Source Content (Reference for Reviewer) (Optional): Contextual information that helps the reviewer understand the background or origin of the content being reviewed. This is particularly useful when reviewing AI-generated responses or summaries. For example, if you’re reviewing an AI-generated customer support response, you would put the customer’s original question here. If reviewing a summary, you would include the source article. This reference material helps reviewers make better decisions but is kept separate from the content being reviewed.

- Metadata (Optional): Custom data that you want to pass through the workflow but don’t need to show to the reviewer. This is submitted as key-value pairs (like customer_email: “john@example.com” or ticket_id: “12345”). The metadata you provide here will be returned to you in the “Human in Loop Task Reviewed” trigger once the review is complete, allowing you to continue your automation with the necessary context. For example, you might pass the customer’s email address or order ID so you can use it in subsequent workflow steps after the review is done.

- Allow metadata to be edited during review (Required): Controls whether the reviewer can see and modify the metadata values during the review process. Set to “No” (default) to keep metadata hidden from reviewers, or “Yes” if you want reviewers to be able to update these values.

- Group Name (Optional): A unique identifier that allows you to organize and separate different types of review tasks. When you set a group name (like “blog-posts” or “support-emails”), only the corresponding “Human in Loop Task Reviewed” trigger with the matching group name will receive events from this action. This is useful when you have multiple review workflows running in parallel and want to ensure each type of task only triggers its specific follow-up actions. Additionally, grouping allows you to process all pending tasks in that group at once. Without a group name, all review completions will trigger all “Human in Loop Task Reviewed” triggers.
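To make the field list and the group-name behaviour concrete, here is a minimal Python model of a task as plain data. It is not EnforcedFlow’s API: the dictionary keys, the `build_task` and `trigger_receives` helpers, and the example group names are hypothetical, and the routing rule encodes one reading of the Group Name description above (a grouped task reaches only triggers with the same group name; an ungrouped task reaches every trigger).

```python
from typing import Dict, Optional


def build_task(
    title: str,
    content: str,
    guidelines: str = "",
    source_content: str = "",
    metadata: Optional[Dict[str, str]] = None,
    metadata_editable: bool = False,
    group_name: Optional[str] = None,
) -> dict:
    """Assemble a hypothetical task payload mirroring the configuration fields."""
    if not title or not content:
        raise ValueError("Title and Content to Review are required")
    return {
        "title": title,
        "guidelines": guidelines,
        "content": content,
        "source_content": source_content,
        # Metadata is passed through and returned by the "Reviewed" trigger.
        "metadata": dict(metadata or {}),
        "metadata_editable": metadata_editable,  # defaults to "No"
        "group_name": group_name,
    }


def trigger_receives(task: dict, trigger_group: Optional[str]) -> bool:
    """Assumed Group Name routing rule (our reading, not a documented API)."""
    if task["group_name"] is None:
        return True  # ungrouped tasks reach every "Reviewed" trigger
    return task["group_name"] == trigger_group


task = build_task(
    title="Reply to support request",
    content="Hi John, thanks for reaching out...",
    guidelines="Match our brand tone and voice",
    metadata={"customer_email": "john@example.com", "ticket_id": "12345"},
    group_name="support-emails",
)
print(trigger_receives(task, "support-emails"))  # True
print(trigger_receives(task, "blog-posts"))      # False
```

Keeping metadata as a separate dictionary, rather than folding it into the content, mirrors the point above: it travels with the task but is not part of what the reviewer edits unless you allow it.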

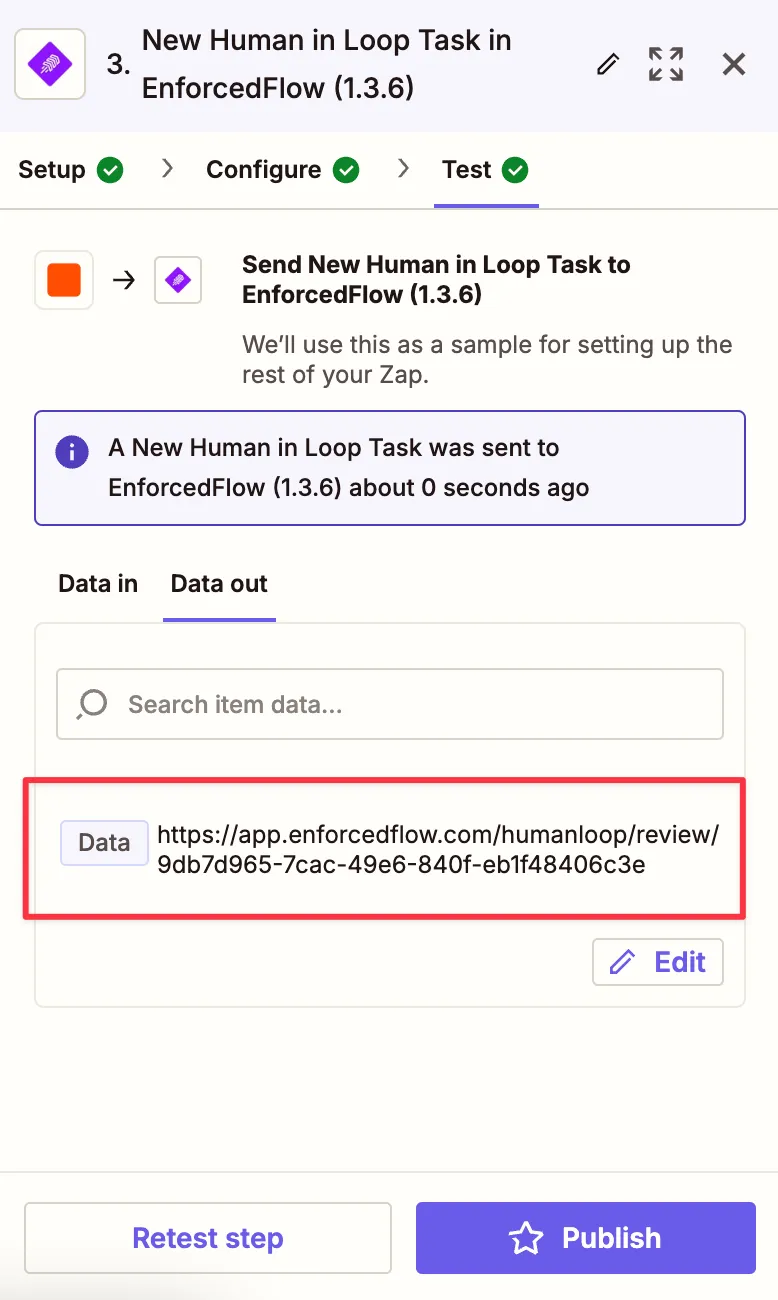

The output of the New Human in the Loop Task action is a URL. A reviewer can open this URL in a browser to review the content.

You can email the URL to a reviewer, send it through Slack, or even create a to-do task for it in ClickUp.

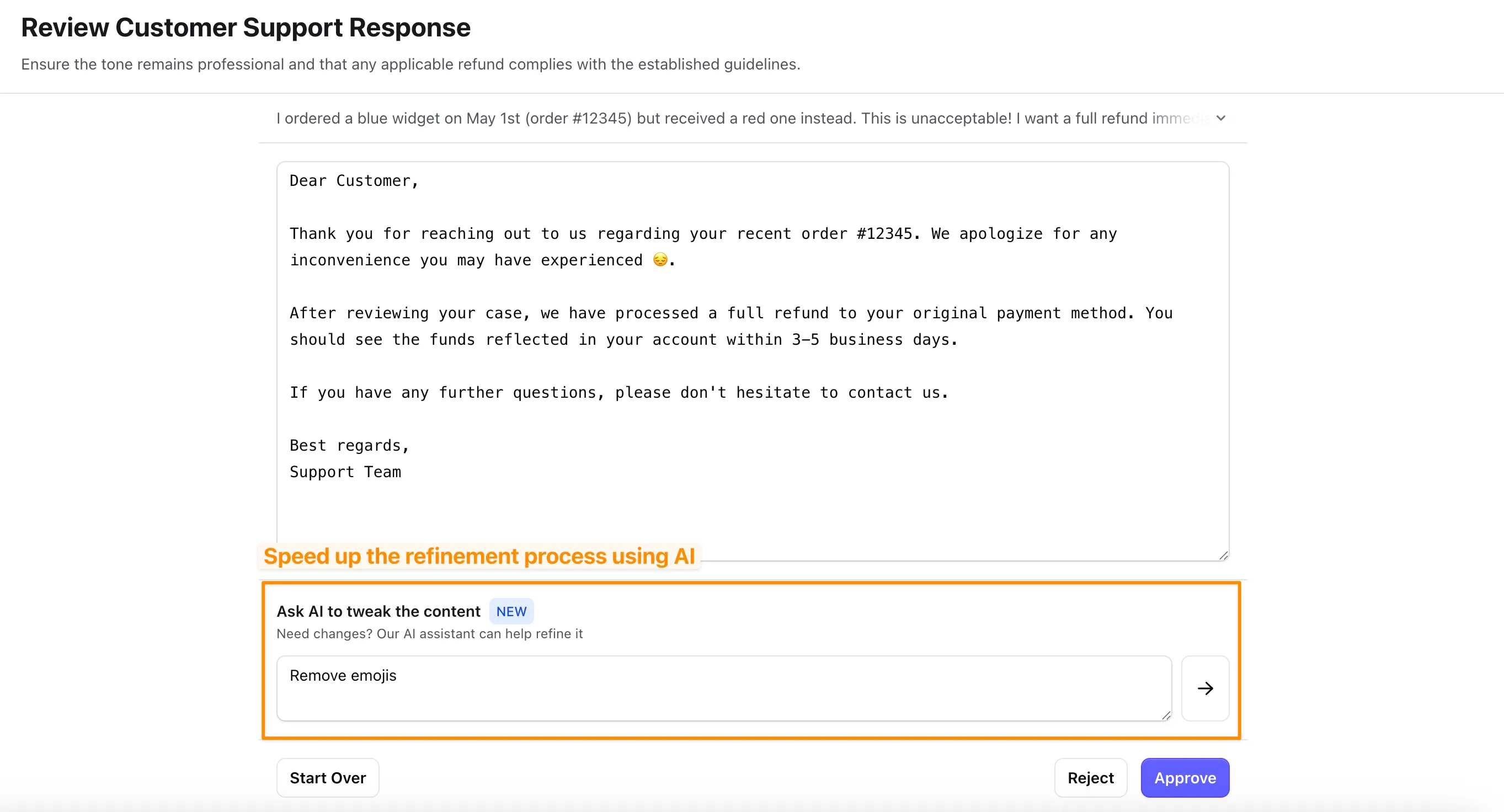

This is what the reviewer sees when visiting the URL. The content is editable. When the reviewer approves or rejects it, Zapier fires the ‘Human in the Loop Task Reviewed’ trigger, which is the second part of the setup.

Step 3: Set up the trigger to take action after a human reviews the content

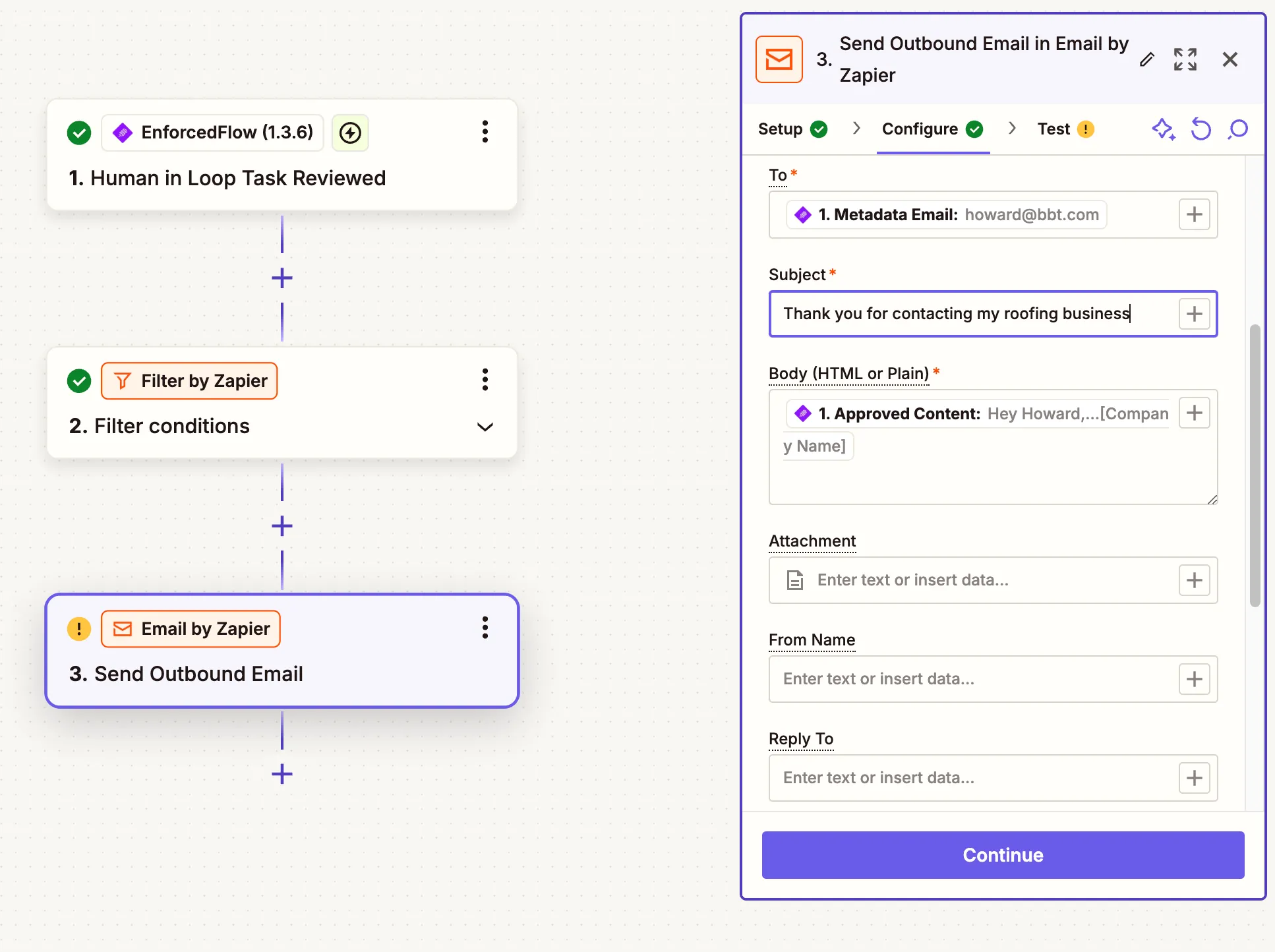

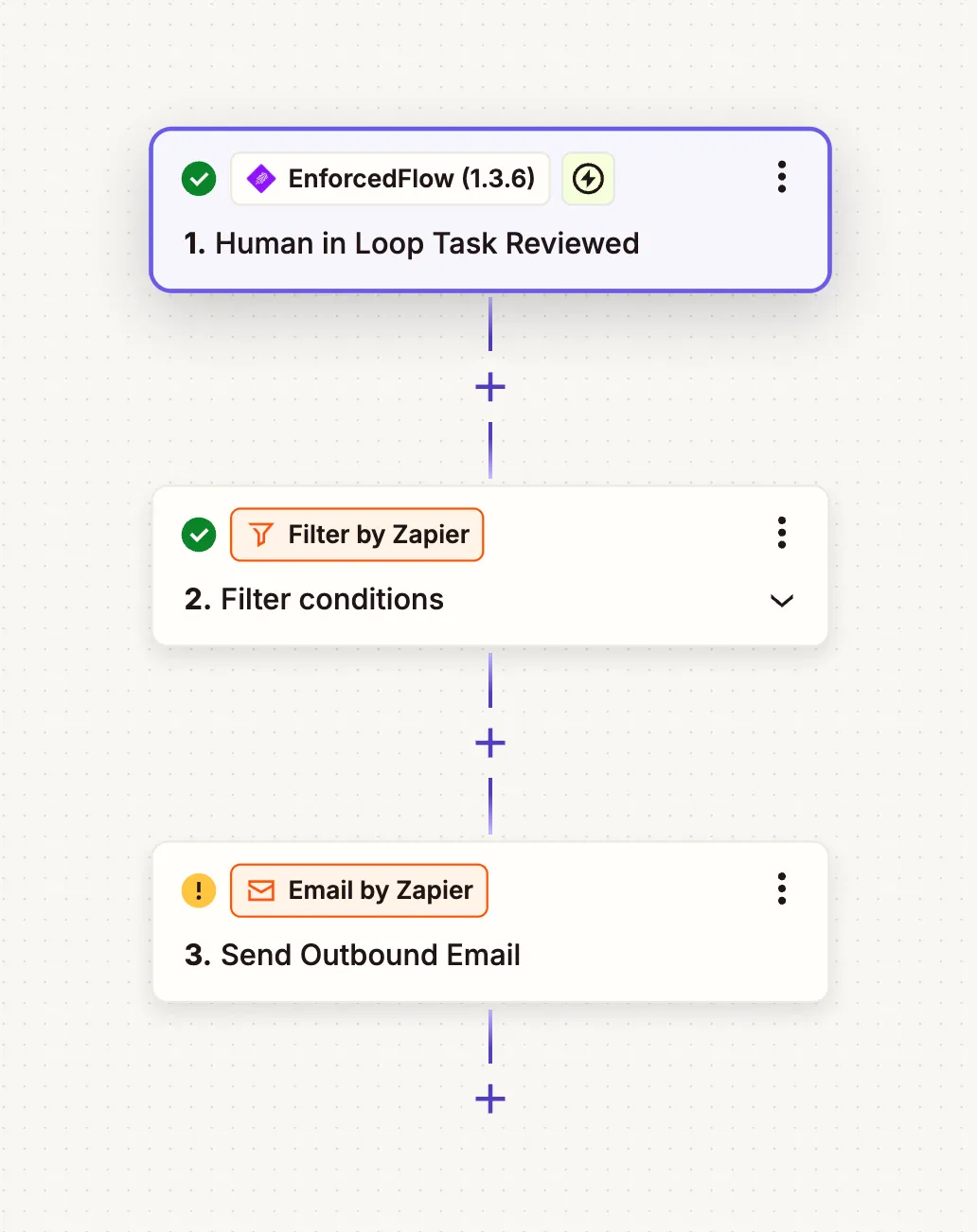

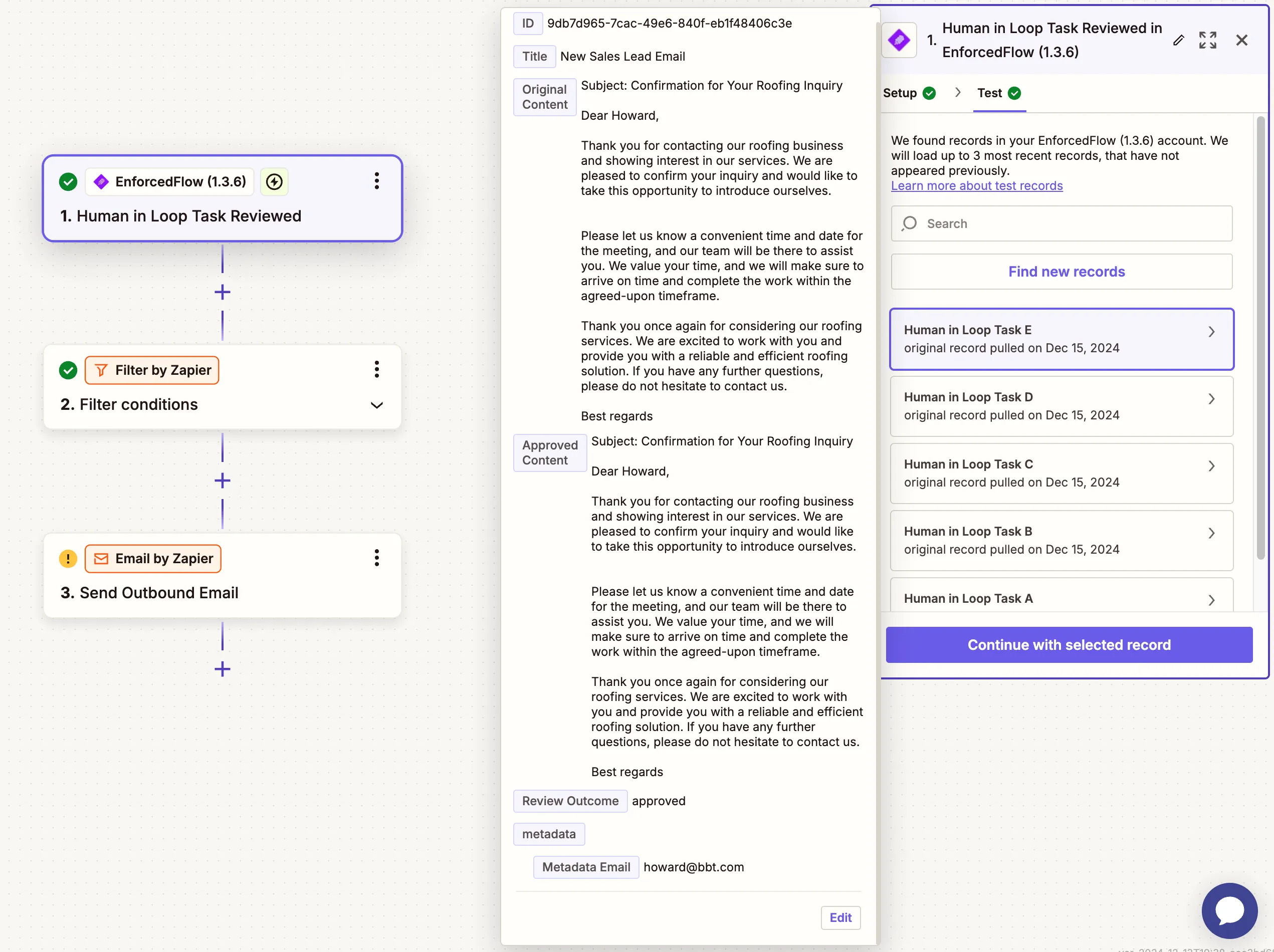

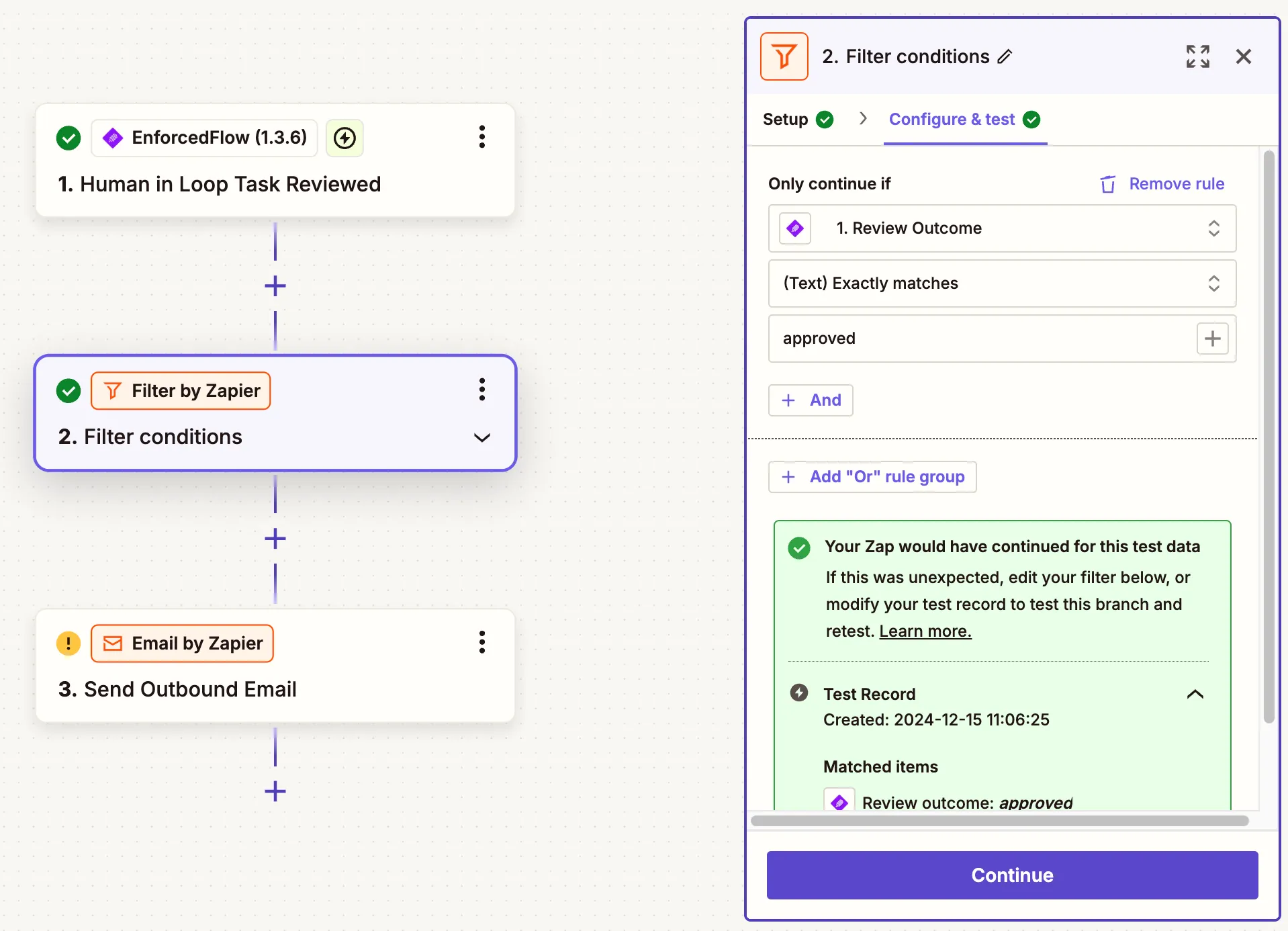

To handle the reviewed content, you need a second automation. For the example described at the beginning, I’ve set it up as follows:

- The “Human in Loop Task Reviewed” trigger from EnforcedFlow

- A filter condition to make sure only approved tasks are processed

- A step that sends the email to the customer

Configuring the trigger

As the screenshots above show, the trigger receives the information from the first automation once a review is complete. It provides the following fields:

- ID: An identifier used by EnforcedFlow

- Title: The title used to create the task

- Original content: The content used when creating the task

- Approved content: The content after approval. If the reviewer doesn’t change anything, this is the same as the original content

- Review outcome: The outcome of the review, either approved or rejected

- Metadata: The list of metadata. You can see that the email passed in the first automation is returned here
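As a rough illustration, the fields above can be modelled as a small Python dataclass. The `ReviewedTask` class, its attribute names, and the lowercase `"approved"`/`"rejected"` outcome values are assumptions made for this sketch; check the actual field labels in your Zap’s trigger output.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ReviewedTask:
    """Hypothetical shape of a 'Human in Loop Task Reviewed' event."""
    task_id: str
    title: str
    original_content: str
    approved_content: str
    review_outcome: str  # assumed to be "approved" or "rejected"
    metadata: Dict[str, str] = field(default_factory=dict)

    def was_edited(self) -> bool:
        # Approved content equals the original when the reviewer changed nothing.
        return self.approved_content != self.original_content


event = ReviewedTask(
    task_id="task-001",
    title="Reply to support request",
    original_content="Hi John, thanks for reaching out...",
    approved_content="Hi John, thanks for reaching out! Your order ships today.",
    review_outcome="approved",
    metadata={"Email": "john@example.com"},
)
print(event.was_edited())       # True
print(event.metadata["Email"])  # john@example.com
```

Comparing the original and approved content is a cheap way to tell whether a reviewer actually edited the draft, which can be useful if you want to log or measure how often AI output needs changes.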

Step 4: Filter for approved tasks

I use a Zapier filter step to make sure only approved tasks are processed. Additionally, you can set up an if/else path or a completely separate automation to handle rejected ones.
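The filter, or an if/else split that also handles rejections, comes down to a branch on the review outcome. This Python sketch shows that logic; the lowercase outcome values and the branch names are assumptions, and the same check could, for instance, be adapted into a Code by Zapier step or expressed directly in Zapier’s filter conditions.

```python
def route_review(outcome: str) -> str:
    """Decide which branch a reviewed task should follow (hypothetical names)."""
    normalized = outcome.strip().lower()
    if normalized == "approved":
        return "send_email"
    if normalized == "rejected":
        return "handle_rejection"
    # Anything unexpected is held back rather than sent to a customer.
    return "stop"


for outcome in ["approved", "Rejected", "pending"]:
    print(outcome, "->", route_review(outcome))
```

Treating unknown values as "stop" means nothing reaches a customer unless it was explicitly approved, which matches the intent of the filter step.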

Step 5: Send the email

Finally, the email is sent to the customer. For the To field I use the Metadata -> Email field, and Approved Content is used for the body of the email.
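The last step maps two trigger fields onto the outgoing message: the recipient comes from the metadata and the body from the approved content. Here is a sketch using Python’s standard `email.message.EmailMessage`. The `"Email"` metadata key, the `build_customer_email` helper, and the subject line are assumptions; in the Zap itself this mapping is done in the email action’s fields rather than in code.

```python
from email.message import EmailMessage
from typing import Dict


def build_customer_email(
    metadata: Dict[str, str],
    approved_content: str,
    subject: str = "Re: your request",
    email_key: str = "Email",
) -> EmailMessage:
    """Compose the customer email from a reviewed task (hypothetical field names)."""
    recipient = metadata.get(email_key)
    if not recipient:
        raise KeyError(f"metadata has no {email_key!r} value")
    msg = EmailMessage()
    msg["To"] = recipient              # Metadata -> Email
    msg["Subject"] = subject           # assumed; not part of the trigger data
    msg.set_content(approved_content)  # the approved text, not the original draft
    return msg


msg = build_customer_email(
    {"Email": "john@example.com"},
    "Hi John, thanks for reaching out! Your order ships today.",
)
print(msg["To"])
print(msg.get_content())
```

Using the approved content rather than the original content ensures that any edits the reviewer made are what the customer actually receives.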